Photo by Papamakarios, Comparison of Modern Stochastic Optimization Algorithms, 2014

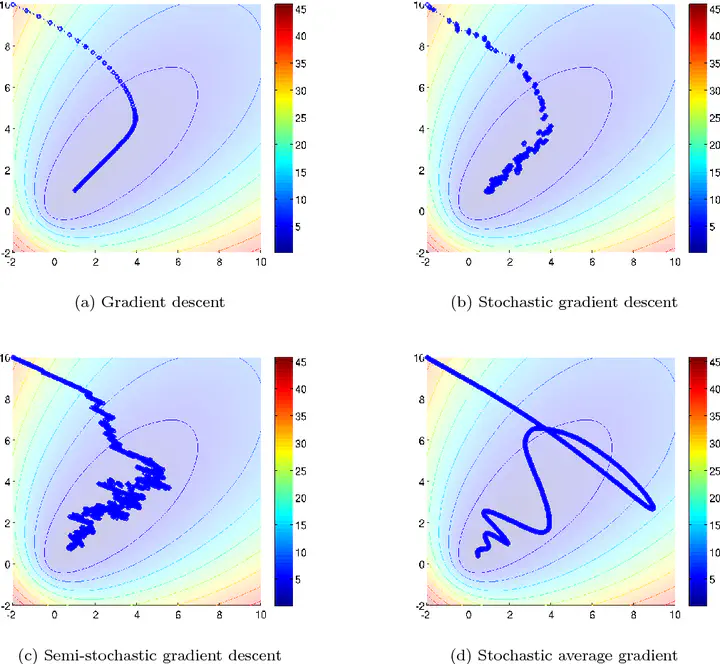

Photo by Papamakarios, Comparison of Modern Stochastic Optimization Algorithms, 2014To solve the image reconstruction problem in the high-dimensional setting, numerous first order solvers which leverage random numerical linear algebra have been deployed in order to reduce the per-iteration computational cost in different machine learning and imaging applications. Well established algorithms like Stochastic Gradient Descent (SGD), order subsets and Alternating Direction Method of Multiplier (ADMM) have gained considerably attention in spectral CT [22, 23]. Unfortunately, the iteration complexity of first order methods relies significantly on the condition number of the problem like SGD which has a sub-linear convergence rate to only a neighborhood of the solution. Instead, the information related to the curvature is decisive to improve the convergence by reducing the condition number and to obtain physical meaningful and accurate quantitative estimation.

Therefore, accounting for a greedy selection of parameters and step-size is crucial to design first-order algorithms for imaging like CT image reconstruction. Deterministic second order methods for solving regularized optimization problems with Generalized Linear Models (GLM) have been widely studied but despite the superior, linear or super-linear, convergence rate of Newton methods compared to first order methods one weakness relies on the high computational cost of calculating the Hessian matrix in high dimensional imaging application.

In this project, a new family of second-order algorithm with application to statistical iterative material decomposition is developed which enjoys an improved accuracy and computation trade-off. We propose a computational efficient quantitative estimation with second order and parameters-free methods based on randomized linear algebra and regularization induced by denoising. We exploit the recent development of randomized sketches and sub-sampling methods for the Hessian matrix to improve upon the computational cost-per-iteration.